#Jprofiler 5.0 1 code

1) Yes, my development cluster is a mirror of production data, down to the media server. The primary difference is 32-bit versus 64-bit and the amount of RAM available, which I can't replicate very easily, but the code, queries, and settings are identical.

2) There is some legacy code that relies on JAXB, but in reordering the jobs to try to avoid scheduling conflicts, I have that execution generally eliminated, since it runs only once a day.

Unfortunately, the problem also pops up sporadically and seems to be unpredictable: it can run for days or even a week without any problems, or it can fail 40 times in a day, and the only thing I can seem to catch consistently is that garbage collection is acting up.

I am looking for:

a) Why a JVM is using 8 GB of physical memory and 2 GB of swap space when it is configured to max out at less than 6 GB.

b) A reference on GC tuning that actually explains, or gives reasonable examples of, when and with what settings to use the advanced collectors.

c) A reference to the most common Java memory leaks (I understand unclaimed references, but I mean at the library/framework level, or something more inherent in data structures, like hashmaps).

Thanks for any and all insight you can provide.
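On the question of why the process can occupy more memory than the configured maximum: the maximum heap setting bounds only the Java heap, while the JVM process also holds non-heap memory pools, thread stacks, and native allocations. The following is a minimal sketch, not the setup described in this post, that prints heap and non-heap usage from inside the application using the standard `java.lang.management` API. The class name `MemoryReport` is hypothetical, and the non-heap figure reported here still excludes thread stacks and most native allocations, so it can only partly explain a gap between the heap limit and the process size.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryMXBean;
import java.lang.management.MemoryUsage;

// Hypothetical helper: compares heap usage with non-heap usage as seen by the JVM.
final class MemoryReport {

    // Formats one MemoryUsage record in megabytes.
    static String describe(String label, MemoryUsage usage) {
        long mb = 1024L * 1024L;
        long max = usage.getMax(); // -1 means the maximum is undefined
        String maxText = (max < 0) ? "undefined" : (max / mb) + " MB";
        return label + ": used=" + (usage.getUsed() / mb) + " MB"
                + ", committed=" + (usage.getCommitted() / mb) + " MB"
                + ", max=" + maxText;
    }

    // Builds a two-line report: the heap first, then the non-heap pools.
    static String report() {
        MemoryMXBean memory = ManagementFactory.getMemoryMXBean();
        return describe("heap", memory.getHeapMemoryUsage()) + "\n"
                + describe("non-heap", memory.getNonHeapMemoryUsage());
    }

    public static void main(String[] args) {
        System.out.println(report());
    }
}
```

Logging this report periodically and comparing it with the resident size reported by the operating system may show whether the growth is inside the heap or outside it.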

#Jprofiler 5.0 1 Offline

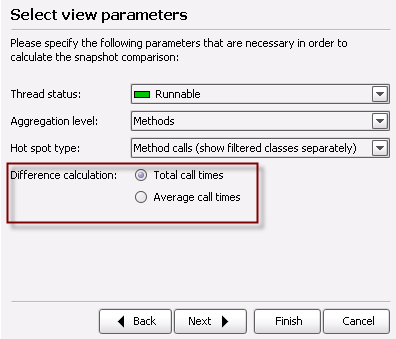

However, when running with JProfiler, the "Run GC" button seems to clean up the memory nicely rather than showing an increasing footprint. Since I cannot connect JProfiler directly to the production box, and resolving proven hotspots doesn't seem to be working, I am left with the voodoo of tuning garbage collection blind. The logs show that as memory usage increases, it begins to throw CMS failures and kicks back to the original stop-the-world collector, which then seems not to collect properly. We are using the ConcurrentMarkSweep collector (as noted above) because the original STW collector was causing JDBC timeouts and became increasingly slow.
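When a profiler cannot be attached to the production box, one option is to have the application record collector activity itself. The sketch below is an assumption about how that could be done, not something described in this post: it reads the collection count and accumulated collection time of each collector through the standard `GarbageCollectorMXBean` interface. The class name `GcStats` is hypothetical, and the collector names it prints are whatever the running JVM reports.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: takes a snapshot of per-collector GC counters.
final class GcStats {

    // Returns one line per collector registered in this JVM.
    static List<String> snapshot() {
        List<String> lines = new ArrayList<String>();
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            // Both counters are cumulative since JVM start; -1 means "not available".
            lines.add(gc.getName()
                    + ": collections=" + gc.getCollectionCount()
                    + ", totalTimeMs=" + gc.getCollectionTime());
        }
        return lines;
    }

    public static void main(String[] args) {
        for (String line : snapshot()) {
            System.out.println(line);
        }
    }
}
```

Writing a snapshot to the application log every few minutes and comparing consecutive snapshots gives a rough picture of how often each collector runs and how long it takes, which can be lined up against the times the box becomes unresponsive.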

The problem appears to be garbage collection. The JVM seems to completely ignore the memory usage settings, fills all memory, and becomes unresponsive. There is nothing else significant running on this box. This is an issue for the customer-facing end, which expects a regular poll (every 5 minutes, with a 1-minute retry), as well as for our operations teams, who are constantly notified that a box has become unresponsive and have to restart it. I have probed it to death with JProfiler and fixed many performance problems (synchronization issues, precompiling/caching XPath queries, reducing the thread pool, and removing unnecessary Hibernate pre-fetching and overzealous "cache-warming" during processing). In each case, the profiler showed these as taking up huge amounts of resources for one reason or another, and they were no longer primary resource hogs once the changes went in. The closest I can get to reproducing the problem is a 32-bit machine with lower memory requirements.
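As an illustration of one of the fixes listed above, precompiling and caching XPath queries, here is a minimal sketch using the standard `javax.xml.xpath` API. It is not the code from this application; the class name `XPathCache` and its methods are hypothetical. The sketch assumes single-threaded use, since `XPath` and `XPathExpression` objects are not thread-safe; a multi-threaded application would need one cache per thread or external synchronization.

```java
import java.io.StringReader;
import java.util.HashMap;
import java.util.Map;
import javax.xml.xpath.XPath;
import javax.xml.xpath.XPathExpression;
import javax.xml.xpath.XPathExpressionException;
import javax.xml.xpath.XPathFactory;
import org.xml.sax.InputSource;

// Hypothetical helper: compiles each XPath string once and reuses the result.
// Not thread-safe; intended for use from a single thread.
final class XPathCache {
    private final XPath xpath = XPathFactory.newInstance().newXPath();
    private final Map<String, XPathExpression> compiled =
            new HashMap<String, XPathExpression>();

    // Returns the compiled form of the expression, compiling it on first use only.
    XPathExpression get(String expression) {
        XPathExpression cached = compiled.get(expression);
        if (cached == null) {
            try {
                cached = xpath.compile(expression);
            } catch (XPathExpressionException e) {
                throw new IllegalArgumentException("Invalid XPath: " + expression, e);
            }
            compiled.put(expression, cached);
        }
        return cached;
    }

    // Evaluates a cached expression against an XML string and returns the string value.
    String evaluate(String expression, String xml) {
        try {
            return get(expression).evaluate(new InputSource(new StringReader(xml)));
        } catch (XPathExpressionException e) {
            throw new IllegalStateException("Evaluation failed: " + expression, e);
        }
    }

    // Number of distinct expressions compiled so far.
    int size() {
        return compiled.size();
    }
}
```

Repeated calls with the same expression string reuse the compiled object instead of parsing the expression again on every document.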
